5 tips to help detect fake AI-generated content

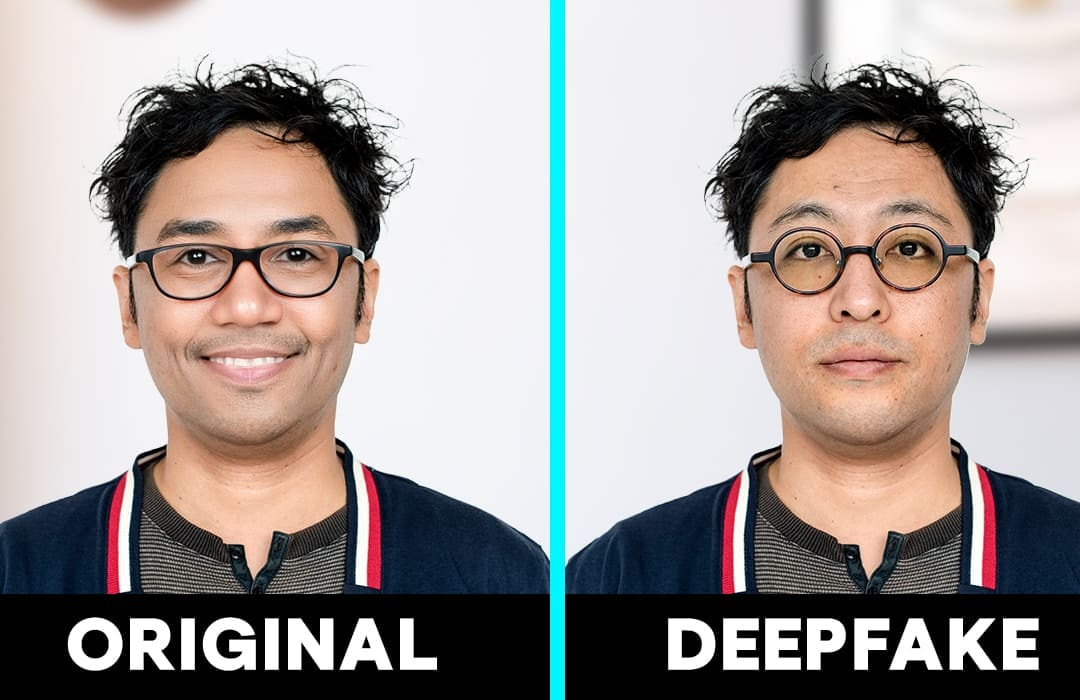

AI-generated videos have exploded in recent times, blurring the line between real and fake. To avoid being fooled, here are some simple tips to help you tell trustworthy content from fabricated content.

As AI advances at breakneck speed, it’s becoming increasingly difficult to tell what’s real from what’s fake online. The problem is no longer limited to still images: AI-generated videos are also proliferating thanks to tools like OpenAI’s Sora and Google’s Veo. This makes separating fact from fiction a huge challenge.

Currently, software for detecting AI-generated video is limited, with the exception of tools focused on deepfakes. So we are largely forced to rely on our own observation and judgment. Here are some key signs that can help you recognize an AI-generated fake video.

1. AI-generated fake videos often don't follow the laws of physics

One of the simplest ways to spot an AI-generated video is to check whether it obeys the laws of physics. In the real world, glasses don’t levitate and liquids don’t pass through solid objects, but in videos made with OpenAI’s Sora, these absurdities can happen.

Even OpenAI admits that Sora doesn’t accurately simulate basic interactions such as glass shattering. When watching videos, pay close attention to both the main action and the background details to spot any anomalies.

Also, listen to your intuition. If something feels “off” to you, even if you can’t quite put it into words, it could be a sign that the video was AI-generated.

2. Carefully observe how the surrounding world reacts

How the surrounding world reacts is another important sign for recognizing AI videos. In real life, taking a bite of an apple leaves a bite mark, but in Sora’s world, that is not necessarily the case.

OpenAI acknowledges that interactions like eating don’t always produce the correct changes in the state of the object. So when watching a video, pay attention not only to the action but also to the reaction that follows it: the physical consequences and the changes to the environment or the object.

For example, a person walks on sand but leaves no new footprints, or water is spilled on the floor but the floor stays dry and unstained.

3. See anything unusual in the video?

Have you ever watched a video and felt “something is wrong” but couldn’t immediately put your finger on it? That’s a common experience when watching AI-generated videos.

The anomalies can be obvious, but sometimes it’s just a fleeting, vague detail that makes you stop and ask yourself: “What moment made me feel uncomfortable?” It’s these small details that often reveal inconsistencies in the operating logic of the virtual world that AI builds.

For example, in one video, we see a human hand carefully painting a cherry blossom branch. The brush moves continuously and the painting gradually appears, but strangely, the color of the strokes suddenly changes even though the brush has never been dipped back into the palette. This is a typical example of the “subtle errors” that viewers need to watch for.

4. Try “reverse tracing” by recreating the video

Another way to assess whether a video is authentic or AI-generated is to try “reverse tracing” it by recreating the video.

Enthusiasts of the Midjourney text-to-image generator have been experimenting with a “reverse-tracing” approach. They closely observe videos generated by Sora, write descriptions that closely match the footage, and feed those descriptions into Midjourney to create still images.

The results show that in many cases, Sora’s videos are just “moving Midjourney photos.” This points to a close connection between image generation and video generation models, and raises questions about Sora’s true level of creativity.

If you want to go beyond simply watching when you analyze a video, you can try something similar. Write the description you believe might have produced the scene, then run it through a text-to-image tool to see how similar the result is.

Next, do a Google search to see whether the footage can be traced to any public source. This method isn’t perfect, but it’s a useful “compass” when determining whether you’re looking at a genuine video or an AI creation.
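For readers comfortable with a little code, the “how similar is it” comparison between a video frame and a text-to-image result can be made a bit less subjective. The sketch below is only an illustration, not a detection tool: it assumes both images have already been shrunk to the same small grayscale grid (plain lists of 0-255 brightness values), and it uses a simple “average hash” comparison chosen here for brevity. The names `average_hash` and `similarity` and the toy pixel grids are made up for this example.

```python
from typing import List

Gray = List[List[int]]  # rows of 0-255 brightness values (assumed pre-shrunk)


def average_hash(pixels: Gray) -> List[int]:
    """One bit per pixel: 1 if brighter than the image's mean, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def similarity(a: Gray, b: Gray) -> float:
    """Fraction of matching hash bits between two same-sized grids."""
    hash_a, hash_b = average_hash(a), average_hash(b)
    if len(hash_a) != len(hash_b):
        raise ValueError("shrink both images to the same size first")
    matches = sum(1 for x, y in zip(hash_a, hash_b) if x == y)
    return matches / len(hash_a)


# Toy 4x4 grids standing in for a video frame and two generated images.
frame = [[10, 10, 200, 200]] * 4
lookalike = [[20, 20, 180, 180]] * 4          # same layout, different brightness
unrelated = [[200, 10, 200, 10], [10, 200, 10, 200]] * 2  # checkerboard

print(similarity(frame, lookalike))
print(similarity(frame, unrelated))
```

A score near 1.0 only means the two images share a similar light-and-dark layout. Like the rest of this tip, it is a hint to investigate further, not proof that a video was AI-generated.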

5. Always be alert and update your technology knowledge

Text-to-video AI technology is still in its infancy, but it is developing extremely rapidly. In just a short time, AI models have become able to create highly detailed videos that viewers struggle to tell apart from real footage.

This means that the risk of being fooled by AI-generated content is increasing. The best way to avoid becoming a victim is to learn to “evolve” with the technology: keep your knowledge up to date, keep an eye on new tools, and practice your image and audio analysis skills.