How to detect deepfake images created by artificial intelligence

(Baonghean.vn) - Detecting deepfake images is becoming increasingly difficult as artificial intelligence (AI) technology continues to advance. However, there are some warning signs that can help you determine whether an image is a deepfake.

Deepfakes are images or videos that have been manipulated with AI to make viewers believe something that is not real. They can be used for a variety of purposes, including entertainment, fraud, and propaganda.

The creation of fake images and videos with AI is quickly becoming one of the biggest problems we face online. Fraudulent images, videos, and audio are on the rise due to the proliferation and misuse of generative AI tools.

With new AI-generated deepfakes appearing almost every day, including ones depicting world-famous figures such as singer Taylor Swift and former US President Donald Trump, distinguishing real from fake is becoming increasingly difficult.

Today, AI tools that generate images and videos from text, such as DALL-E, Midjourney, and Sora, make creating deepfakes easier than ever: users just type in a request, and the system generates the content automatically.

These fake images may seem harmless, but they can be used to commit fraud, steal identities, spread propaganda, and manipulate elections.

In its early days, deepfake technology was limited and often left obvious signs of manipulation. Fact checkers pointed out images with glaring flaws, such as a hand with six fingers or glasses with two differently shaped lenses.

But as AI improves, detection has become much harder. Henry Ajder, founder of the consultancy Latent Space Advisory and a leading expert on generative AI, said some widely shared advice, such as looking for unnatural blinking patterns in deepfake videos, no longer works.

Still, Ajder said, there are some signs that can give a deepfake image away, such as an electronic gloss on a person's portrait: “an aesthetic smoothing effect” that makes the skin “look surprisingly shiny.”

Also check whether shadows and lighting are consistent. Often the subject of the photo will be in sharp focus and look convincingly real, while elements in the background look less realistic or less polished.

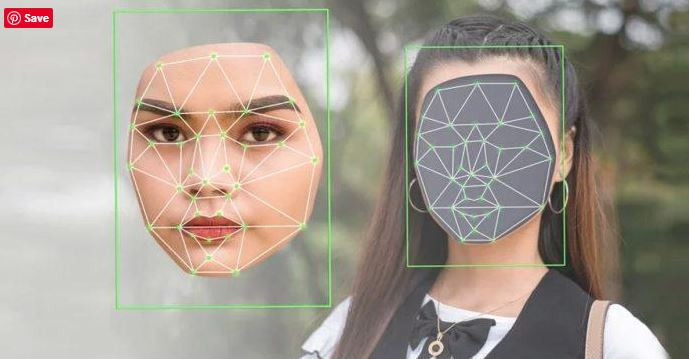

Look at the face

Face-swapping is one of the most popular deepfake methods. Experts recommend looking closely at the edges of the face. Does the skin color match the rest of the head or body? Are the edges of the face sharp or blurry?

If you suspect a video of a person speaking has been edited, look at their mouth. Do their lip movements match the audio perfectly?

Ajder also recommends looking at the teeth. Are they sharp, or are they blurry and inconsistent with how the person's teeth actually look?

US cybersecurity company Norton says the algorithms may not yet be sophisticated enough to render individual teeth, so a lack of outlines for each tooth could be a sign that an image is a deepfake.

Consider the broader context

Context can also be revealing. Take a moment to consider whether what you're seeing is plausible.

Poynter, a US-based nonprofit focused on journalism and media education, advises that if you see a public figure doing something that seems “ostentatious, unrealistic, or out of character,” it may be a deepfake.

For example, would the Pope really wear a fancy puffer jacket, as in the famous fake photo? If he did, wouldn't mainstream news outlets publish more photos or videos of it?

Using AI to detect deepfakes

Another approach is to use AI against itself. Microsoft has developed an authentication tool that can analyze a photo or video to give a confidence rating of whether it has been tampered with, while semiconductor maker Intel's FakeCatcher uses algorithms to analyze the pixels of an image to determine whether it is real or fake.

There are also online tools that promise to sniff out fake content if you upload a file or paste a link to suspicious material. But some, like Microsoft's authenticator, are available only to select partners and not to the public. That's because researchers don't want to tip off bad actors and hand them a bigger advantage in the deepfake arms race.

Opening detection tools to the public could also give people the impression that they are “godlike technologies” that can do our critical thinking for us, when instead we need to be aware of their limitations, Ajder says.

Experts say that expecting ordinary users to become digital detectives could even be dangerous, because it could give them false confidence at a time when even experts are finding deepfakes increasingly difficult to detect.

Detecting deepfakes is an ever-growing challenge, and no method is 100% effective. However, by taking precautions and raising awareness, we can reduce the risk of being fooled by deepfakes.