Is education the foundation for mastering artificial intelligence?

Since ChatGPT made a global splash in 2022, AI has been redefining the role of labor, skills, and ethics in every field. Amid great opportunities and profound challenges, education is the fulcrum that keeps each individual from being swept away by the whirlwind of automation and instead teaches them to use AI wisely, humanely, and responsibly.

Technological momentum and a global AI boom

In November 2022, ChatGPT was launched, and it reached an estimated 100 million users in about two months, widely reported at the time as the fastest adoption of any consumer application in the history of the Internet. By the end of 2023, according to the OECD's AI Incident Monitor, the number of AI incidents reported by the international press had skyrocketed 1,278% compared to the previous year, coinciding with the explosion of generative models. From content creation and programming to healthcare and public administration, AI is quietly redefining the role of humans: repetitive tasks are gradually being automated, while skills in supervision, creativity, and coordination with machines are increasingly in demand.

This explosion forced international organizations to urgently shape the “rules of the game”. On September 7, 2023, UNESCO published the first global guidance on generative AI in education, with a clear message: technology must be placed within a humanistic framework that respects human rights, rather than pursuing performance alone. About six months later, on March 13, 2024, the European Parliament passed the AI Act, the world's first comprehensive, legally binding law on artificial intelligence. It classifies AI systems used in areas such as education, healthcare, and recruitment as “high risk” and requires such systems to be transparent and subject to human oversight. This timeline shows how quickly AI has become a matter of law and policy, and how not knowing how to use AI has become a new disadvantage when competing in the digital age.

When society meets AI: 3 big bottlenecks

Despite its undeniable potential, AI comes with a number of challenges. First, the gap in digital literacy and skills has led many to view AI as a “magical black box.” Without the ability to ask the right questions or verify data sources, users are more likely to accept any results generated by the machine, contributing to the spread of bias.

Second, the vast datasets that AI consumes are loaded with historical biases. Without ethical and legal literacy, we could face copyright infringement, privacy invasions, or large-scale emotional manipulation, all risks that the AI Act warns against.

Finally, the structure of jobs is changing. AI is eliminating the need for many repetitive roles, while also creating new jobs like prompt engineers, model testers, and ethics monitors. The skills gap will widen if education systems don’t adapt quickly.

Education is the key

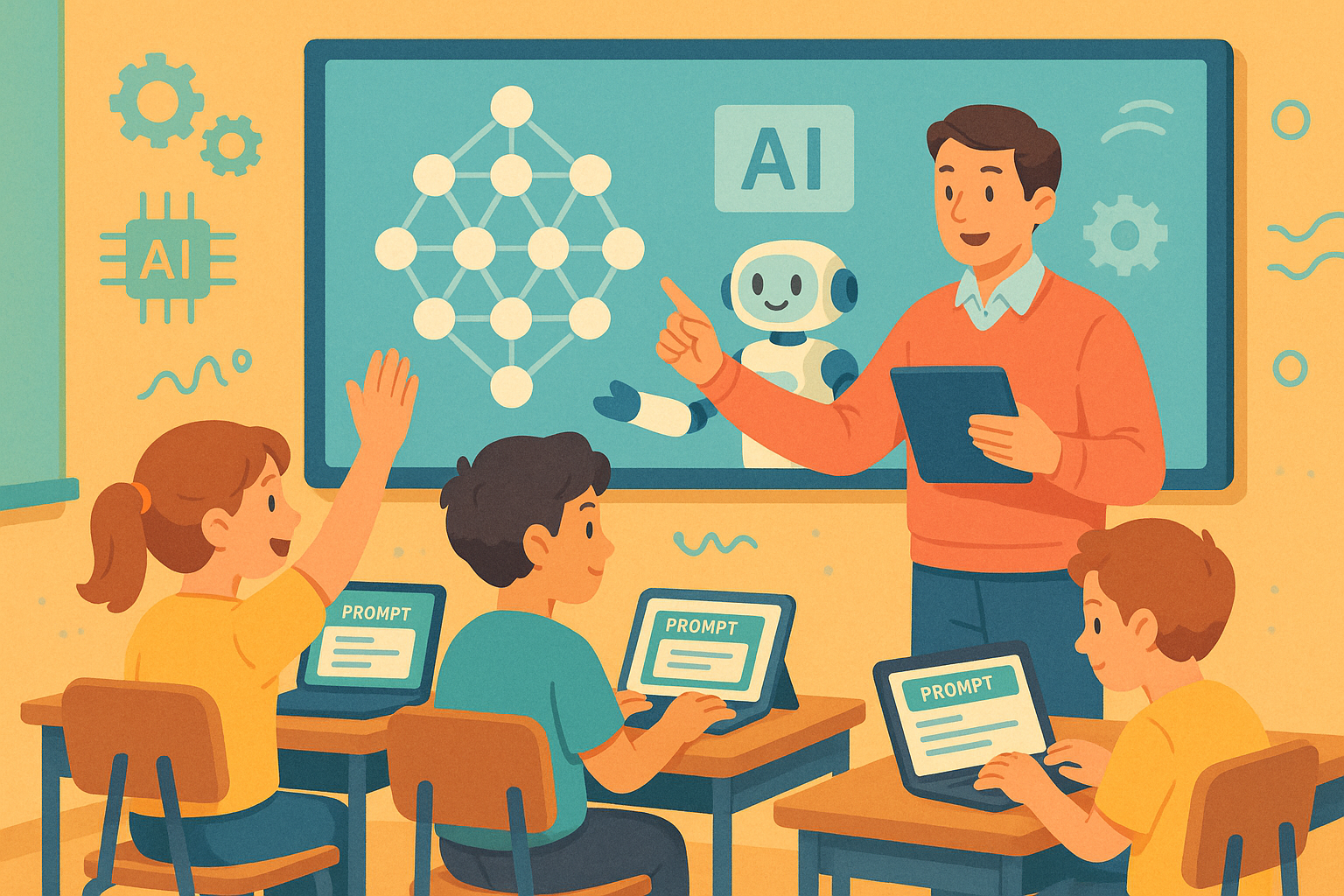

The common thread among all three is the need for universal AI literacy. UNESCO recommends that every curriculum, from primary school to university, teach learners “how AI learns,” awareness of data rights, and how to use tools safely and ethically. The OECD recently announced plans to include a Media and AI Literacy (MAIL) assessment in the PISA 2029 survey; this promises to create a global benchmark for 15-year-olds' AI proficiency.

In this picture, education plays a three-tiered role. At the basic level, the school's mission is to move from “teaching how to use computers” to “teaching how to collaborate with AI”: understanding how machine learning works, knowing how to write effective prompts, and knowing how to evaluate and edit the output.

At the advanced level, the program should incorporate real-life ethical scenarios – from essay-writing chatbots to deepfakes – to help learners practice analysis and responsible decision-making.

At the lifelong level, a network of short courses and micro-credentials helps experienced workers continuously update their skills, especially in hard-to-automate competencies such as creativity, critical thinking, and leading multicultural teams.

Of course, content reform alone is not enough. Teachers must be equipped to become “learning architects,” using AI as a teaching aid while retaining their pedagogical autonomy. At the same time, e-learning databases, which record how students interact with AI, must adhere to the “right to explanation” principle set out in the AI Act, ensuring transparency and privacy.

From Policy to Classroom: Models for Action

At the national level, an AI Competency Strategy for 2030 could set targets for: 80% of the workforce to complete a basic AI course; 100% of teachers to have access to UNESCO-standard materials; and all training institutions to run a mandatory AI ethics program. This framework would include a regulatory sandbox for testing new technologies under supervision, and an AI education innovation fund to help schools and businesses collaborate on solutions.

At the local level, Community AI Learning Hubs could provide equipment, connectivity, mentorship, and startup advisory programs. This model is especially useful in rural areas, where the risk of “digital white areas” (places without adequate connectivity) is highest.

At the enterprise level, the “twin-mentor” model – pairing a human mentor with an AI assistant – helps employees shorten the upskilling cycle. In parallel, “sandbox labs” allow AI to be tested on real processes but under strict supervision, turning internal data into a shared asset for learning.

What ties these three levels together is interdisciplinarity. When computer science students are required to collaborate with peers from law, psychology, and design on their senior projects, they learn not only to write algorithms but also to assess social impact, including inclusivity and sustainability.

Artificial intelligence is moving from the realm of “future technology” to become the default infrastructure of life. As that infrastructure penetrates every corner of society, our choices narrow: either take the initiative or be swept away. Education, with its functions of transmitting knowledge, stimulating critical thinking, and nurturing human values, is the foundation that ensures humans do not lose themselves in the whirlwind of automation.

Today, education empowers individuals to understand, question, and regulate AI. In the future, education will determine whether the “virtual colleagues” that society creates become creative enablers or uncontrolled forces. No matter how powerful AI becomes, the journey to harnessing it will still begin in the classroom, where we learn to ask “why” before asking “how.”